Quasi-Experimental - Studies?Effects of Television

Quasi-Experimental

Studies?Effects of Television

Definition of Quasi-Experimental Research Analysis of the "Sesame Street" Study

A Nonequivalent Control Group Study: "Sesame Statistical Conclusion Validity

Street" Internal Validity

Construct Validity

Methodology

External Validity

Results

A Time-Series Study: TV and Reading

Threats to Valid Inference

Analysis of the TV and Reading Study

Threats to Statistical Conclusion Validity

Using Randomization in Field Research

Threats to Internal Validity

Examples of Situations Where Randomization

Threats to Construct Validity of Causes and Effects

May Be Possible

Threats to External Validity

Relationships Among the Four Types of Validity Randomization in Quasi-Experimental Studies

Summary

Suggested Readings

Science is simply common sense at its best—that is, rigidly

accurate in observation, and merciless to fallacy in logic.

—Thomas H. Huxley

Suppose you are a researcher trying to study the results of a new educational or medical program?perhaps an educational TV program for children, or a new treatment procedure intended to prevent the recurrence of cancer after surgery. You might like to study the program's effects experimentally by randomly assigning individuals to various treatment and control conditions. However, the school authorities or the hospital administrators might not allow that, for students' class assignments or patients' treatment plans may already have been determined, based on nonexperimental considerations.

You could, of course, switch your research plans to a passive correlational design, and merely take data from the institution's records to relate the treatment program to students' or patients' later progress. But your choices are not limited to an active experimental design or a passive correlational design.

Another research option is to use an active approach and plan a quasi-experimental study. Quasi-experimental methods fall between experiments and correlational research in their degree of rigor and control. They attempt to live up to Huxley's standards in the above quotation, for they particularly stress careful observation or measurement, and thorough logical analysis of all plausible causal relationships in the situation under investigation.

The prefix quasi means "almost." By definition quasi-experiments do not meet one of the defining characteristics of experiments: The investigator does not have full control over manipulation of the independent variable, and therefore cannot assign the subjects randomly to conditions. However, in other respects they can be just as rigorous, for the investigator does have control over how and when the dependent variable is measured, and usually also over what groups of subjects are measured. Campbell and Stanley (1966) pioneered the concept of quasi-experiments, and they have stressed that by carefully choosing measurement procedures and subject groups, we can construct research designs that are close to true expertmental designs in their power to produce valid causal inferences. Consequently, quasi-experimental designs are "deemed worthy of use where better designs are not feasible" (Campbell & Stanley, 1966, p. 34).

There are a large number of possible quasi-experimental designs, ranging from simple ones with many problematic aspects to complete ones, which guard against many of the likely threats to valid inference.

Campbell and Stanley (1966), Cook and Campbell (1979), and Cook, Campbell, and Perrachio (1990) have full discussions of the possible designs and their strengths and weaknesses in refuting alternate causal hypotheses. In this chapter we will summarize the concept of threats to valid inference, as discussed by Cook et al. (1990), and describe two studies that illustrate two major types of quasiexperimental designs: nonequivalent control group designs and timeseries designs.

A NONEQUIVALENT CONTROL GROUP

STUDY: "SESAME STREET"

The awardwinning TV program, "Sesame Street," attracted a great deal of attention and considerable scientific research in its early years. One of the studies was a dissertation project by Judith Anton (1975), in which she compared the school readiness of kindergarten children who had watched "Sesame Street" with that of quite comparable (but not randomly assigned) children who hadn't watched it?a nonequivalent control group design.

To see why Minton had to use a quasi-experimental research method, imagine how a randomized true experiment would be done with viewing of "Sesame Street" as the independent variable. Children would be randomly assigned to at least two conditions, and those in the viewing condition would be required to watch the program (perhaps during their kindergarten class), while those in the control condition would be prevented from watching it. The children would have to be in the same kindergarten class unless assignments to wo or more classes had been made by strictly random methods (a rare procedure in school systems). If they were in the same class, the nonviewing group would probably resent missing out on the new program, and n any case they might be able to see the program after chool or on weekends, or even hear about it from their lassmates, thus damaging the experimental contrast.

Though in some situations the experimenter might have enough control to accomplish a true, randomized xperiment on TV viewing, such situations are cerainly rare (and they would raise ethical issues about denying a possible benefit to the control group).

Minton did not have that amount of control, so she had to look for a situation where some children watched "Sesame Street" as part of their normal daily activities and others did not. A few such situations ome readily to mind?a whole kindergarten class that watched the program during the school period versus one that didn't; children who had TV sets in their homes versus ones who didn't; and so on. However, the next step must be to ensure that the two groups of children, though not randomly assigned, are as similar as possible in all respects except for viewing "Sesame Street"; otherwise the nonviewing children would not be an adequate control group. This is a very tricky step because usually many other differences accompany any such contrast. For instance, families owning TV ets are apt to be more affluent, or (in the early days of TV) more technologically minded, than families without them. Similarly, two kindergarten classes, whose tudents have been assigned according to parental prefrences, school-district judgments of compatibility or chool readiness, or some other systematic approach, are likely to differ in many ways. If the classes are not rom the same school, they are likely to differ still more because of demographic disparities in the areas served by the schools.

Methodology

As a result of such considerations, Minton chose to ompare children who were in kindergarten during 1969-1970, the first year that "Sesame Street" was broadcast, with those who were kindergartners in the preceding few years. Thus she was able to ensure that the control group had never watched the program, and she was able to establish a control group from the same community, with similar backgrounds and other experiences to the experimental group. This is called a cohort study; it compares two cohorts, which are defined as groups who have the same experience at about the same point in time. For example, a birth cohort is composed of people born in the same year, and a marriage cohort includes couples who married in the same year. Though this was still a nonequivalent control group design, the two groups should be quite closely comparable, and Minton used some clever additional techniques to make them even more comparable.

The students chosen for study comprised all the kindergartners in a single school district with a heterogeneous population (about 500 each year for several years). The dependent variables were their scores on the Metropolitan Readiness Test (MRT), a standardized test routinely given to all kindergartners in the district each May. This, then, was an archival study, since the testing was a routine school district activity and the experimenter did not have to collect the school readiness scores herself; and consequently the research procedure was admirably free from any reactivity?as well as cheaper and more feasible to carry out.

The author compared the 1970 MRT scores with the previous years' scores for several different groups.

Comparison 1 was for all the kindergartners in the district during those years. Comparison 2, one of the clever features, used a subset of the Children who should be even more comparable than the total group: children who had siblings in the earlier kindergarten classes, with the older siblings' previous MRT scores serving as the control data. The author even included a control for any possible differences due to sibling order (older versus younger) by adding an analysis of data for 1969 kindergartners (before "Sesame Street" began) compared with their older siblings in previous years. Comparison 3, another look at a demographically more comparable subset of children, examined only the children who went on to parochial school.

(Since the parochial school had no kindergarten, all children in the district went to the public kindergarten.)

Finally, Comparison 4 examined the factor of socio---- == economic status by comparing the scores of a group of "advantaged" youngsters from a notably affluent community in the district with the scores of a "disadvantaged" group of summer Head Start children whose parents were near the poverty level.

The MRT yields scores on six subtests and a total score. Though all of these scores might have been influenced by the information obtained from watching "Sesame Street," the subtest that was clearly most relevant to the program's content was Alphabet, a 16-item test of recognition of lowercase alphabetical letters.

That point was established by an intensive content analysis of the program's first year, which showed that more time was devoted to teaching letters than to any other topic (Ball & Bogatz, 1970).

Results

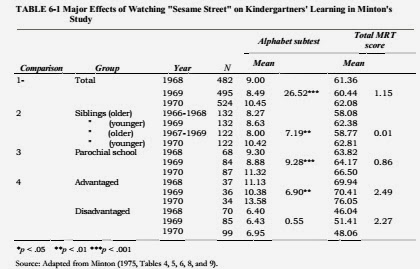

Though tests were made on all the MRT scores, it turned out that significant differences were obtained only on the Alphabet subtest. This finding indicated some divergent validity in the data, since differences higher total score in 1970. The only group that did not show a marked increase in 1970 was the disadvantaged group, who also had the lowest mean scores.

TABLE 6-1 Major Effects of Watching "Sesame Street" on Kindergartners' Learning in Minton's

Study

These results provide considerable confirmation of the study's hypothesis that exposure to "Sesame Street" would increase children's school readiness.

Though many comparisons were made in the study, it was not just a "fishing expedition," because the pattern of findings was highly consistent?improvement on the Alphabet subtest in almost every group of children, and no significant improvement on any of the other scores. Moreover, the additional tests made on the subgroups of children, particularly the groups of paired siblings, provide corroborative evidence based on treatment and control groups which are probably as comparable as could be attained without random assignment of matched pairs of children.

However, these laudatory comments do not mean that Minton's study was free from all dangers of reaching false conclusions, for it was not. To see why this is so, we will now discuss the many factors that can lead to incorrect causal inferences being made from research results.

THREATS TO VALID INFERENCE

Recall that a major goal in doing research is to reach conclusions about cause and effect relations between variables, and such conclusions are always inferences?

never proven in any ultimate sense. We reach such conclusions by using all the available relevant information to help us interpret the results of research studies. In the process, many threats to valid inference may arise?factors that would lead us to incorrect causal conclusions. The better the research design, the fewer such threats will be present, but even the best research designs are apt to have some aspects that are not fully controlled or are open to differing interpretations.

In general, experiments have relatively few threats to valid inference, while correlational studies have more.

Quasi-experiments are in between and, depending on the particular design involved, they may range from very poor to very good in their safeguards against invalid conclusions.

What are some of these threats to valid inference?

Cook et al. (1990) have described a large number and classified them under four main headings (see Table 6-2 for a convenient list). These are not the only threats to validity, for any particular research design may suffer from other idiosyncratic threats and may avoid many of these threats. Therefore this list should not be used in "cookbook" fashion. Rather, the important point is that any research design should be systematically analyzed for threats from rival hypotheses that could explain the findings.

Threats to Statistical

Conclusion Validity

The first question in analyzing research findings is usually: Was there any effect? That is, were the presumed independent and dependent variables actually related?

This is the question of statistical conclusion validity.

Some of the major problems that can lead to incorrect answers to this question are:

1. Low Statistical Power. This can result from use of small samples or of statistical tests that have low power to detect effects. Also, each statistical test makes a set of underlying assumptions about the data, and these assumptions need to be checked, since violating some of them can lead to markedly distorted conclusions.

2. Fishing. A "fishing expedition," in which many different scores are compared statistically, will typically find some falsely "significant" comparisons simply because of chance factors. For example, of 100 ests, about 5% should be significant at The .05 level due to chance alone. In studies where many tests must be made, there are procedures for adjusting the error rate (alpha) to compensate for this problem.

3. Low Reliability of Measures. Instruments that are unreliable, or insensitive (for instance, ones having so few scale points that they give only a coarse score), can lead to false conclusions of "no effect." Where this problem is anticipated beforehand, there are several ways of increasing measurement reliability (cf. Sechrest, Perrin, & Bunker, 1990).

4. Low Reliability of Treatment Implementation (of the IV). This kind of nonstandardization often occurs when a given experimental treatment must be carried out many times by one person or by several different people. If the treatment is implemented in varying ways, its effectiveness may differ accordingly, and so the average results may be misleading.

5. Random Irrelevancies in the Experimental

Setting. This threat often occurs in field situations where many aspects of the situation cannot be held constant (such as layoffs in work settings or weather in environmental studies). When such extraneous variables seem likely to have an effect on the dependent variable, they should be measured as carefully as possible and included as factors in the statistical analysis.

6. Random Heterogeneity of Respondents. The more heterogeneous a group of individuals, the more hey will differ in their responses to a treatment. If some of their personal characteristics are related to heir response to the treatment, that will increase the variance of the outcome measures, and thus make it harder to detect a treatment effect through statistical ests. This threat can be avoided by choosing a quite homogeneous group of respondents, such as male inroductory psychology students (as was often done in past decades). However, that procedure tells us nothing about the characteristics of females or the geheralizability of results to other age or educational groups (an ssue of external validity). The best way to handle such problems is to measure the individual-difference variables and include them as factors in the data analysis.

Several ways of combatting these potential errors in statistical conclusions have been suggested. One is to estimate the sample size that would be necessary in order to detect an experimental effect of a reasonable or ikely size (Lipsey, 1990). For instance, if a 20% decrease in the recidivism rate of juvenile delinquents seems a reasonable goal, how big a sample would be necessary to detect an actual change of that extent? Another way is to reduce the error term in the statistical analysis as much as possible. This can be done by usng an own-control design, by matching subjects before their random assignment to treatments (a randomzed block design), or by using analysis of covariance o remove the effect of extraneous respondent or situaional variables on the depeodent variable measure. A hird approach is to improve the reliability or sensitivty of measuresfor instance, by combining several tems into a more reliable composite (Cook & Campbell, 1979; Cook et al., 1990).

TABLE 6-2 List of Possible Threats to Valid Causal

Inference

Inference

Threats to Statistical Conclusion Validity

1. Low statistical power

2. Fishing (statistical tests of many different scores)

3. Low reliability of measures

4. Low reliability of treatment implementation

5. Random irrelevancies in the experimental setting

6. Random heterogeneity of respondents

Threats to Internal Validity

1. History (extraneous contemporary events)

2. Maturation (systematic changes in participants)

3. Testing (effects of prior testing)

4. Instrumentation (changes in a measuring instrument)

5. Statistical regression (of extreme groups)

6. Selection (nonequivalent comparison groups)

7. Mortality (drop-outs)

8. Interactions with selection

9. Ambiguity about the direction of causal influence

Threats to Construct Validity of Causes and Effects

1. Inadequate preoperational explication of constructs

2. Mono-operation bias (only one operation for a onstruct)

3. Mono-method bias (varied operations but only one measurement method)

4. Interaction of procedure and treatment

5. Diffusion or imitation of treatments

6. Compensatory equalization of treatments

7. Compensatory rivalry from other participants

8. Resentful demoralization of other participants

9. Hypothesis-guessing within experimental conditions

10. Evaluation apprehension

11. Experimenter expectancies

12. Confounding of constructs with levels of constructs

Threats to External Validity

1. Interaction of different treatments

2. Interaction of testing and treatment

3. Interaction of selection and treatment

4. Interaction of setting and treatment

5. Interaction of history and treatment Source: Adapted from Cook, Campbell, & Peracchio (1990, pp.498-510).

Threats to Internal Validity

If statistical tests show a significant effect (or if they do not), the next major question in research is: Is the demonstrated relationship a causal one rather than the result of some extraneous factor (or alternatively, are there confounding factors that prevented a causal relationship that actually exists from being demonstrated)?

These issues pertain to the internal validity of the study?that is, whether the independent variables really had a causal effect in this particular study. Among the many possible threats to internal validity are the following factors:

1. History. This term refers to the effect of some extraneous event during the course of a study. For instance, a war that broke out in the Middle East during a study of attitudes toward national defense would probably influence the study's dependent variable, and its effects might be mistaken for those of whatever independent variable was being studied (such as a presidential campaign featuring debates on military preparedness).

2. Maturation. This term refers to any systematic changes in the respondents during the course of a study, such as their becoming older, better informed, or more capable of performing certain tasks. It is a threat when any effects of these changes can be confounded with those of the independent variable. For instance, was an increase in a child's reading ability due to an instructional program or to normal developmental progress?

3. Testing. When pretests are used in a study, the experience of having been measured before can change responses on a later readministration of the same or a similar measuring device. For instance, an increase in measured IQ due to taking the same test a second time may be confused with the effects of the educational program being studied.

4. Instrumentation. Changes in a measuring instrument during the course of a study can distort the meaning of the results. Examples are changes in observers' rating standards as they become more experienced, or decreases in the difficulty of test items due to wide publicity about them, or revisions to the wording of items on a scale.

5. Statistical Regression. This is a threat when-ever subjects are chosen for study partly or largely because they are unusually high or low on some characteristic, such as intelligence, activism, poverty, or achievement. Scores on any such personal characteristics always contain some error of measurement, as well as a true component. Consequently, because the extremity of the scores is partially due to measurement error, there is always a tendency for the extreme scorers' later scores on the same or related instruments to regress toward the population mean (that is, to become less extreme). The problem here is that this statistical effect is likely to be confused with the effect of the independent variable under study. Such confusion has often occurred in studies of compensatory education programs, where intended "control" groups of low-performance students are apt to be more different from the mean of their population than the disadvantaged treatment recipients are from their population mean. As a result, the "control" group will usually regress more than the disadvantaged treatment group, and this effect opposes the effect of the educational treatment, tending to make it look ineffective even though it may be helpful.

6. Selection. This term refers to systematically different sorts of people being selected for various treatment groups. Naturally, their differences are apt to be confounded with the treatment effects. Since quasi-experimental research, by definition, involves situations where treatment groups cannot be chosen in ways that ensure their equivalence, selection effects are an ever-present danger.

7. Mortality, or Dropouts. Differential dropout can make even randomized, equivalent groups non-equivalent at a later time. Therefore, the amount of respondent attrition and the extremity of the individuals who drop out need to be checked within every group, and compared across groups.

8. Interactions with Selection. Several of the above threats to internal validity can combine with selection differences to produce effects thartan easily be mistaken for treatment effects. For instance, a selection-maturation interaction occurs when two or more groups are maturing at different rates. Other common threats are a selection-history interaction or selection-instrumentation interaction.

9. Ambiguity About the Direction of Causal Influence. Even when the threats to internal validity have been reduced, the direction of causation between the variables may be unclear. This is not usually a problem in experiments, where the causal variable is normally the one that was manipulated. However, it is apt to be a problem in correlational or quasi-experimental research if all the measures are collected at the same time. For instance, does poor school achievement cause truancy, or does truancy cause poor school achievement, or does each have a causal influence on the other?

In deciding about internal validity, the investigator has to consider whether, and how, each of these possible threats could have affected the research data and then perform whatever tests are feasible to rule out as many potential threats as possible. In general, a randomized experiment will usually rule out all nine of these threats to internal validity, which is why experiments are so helpful in inferring causation. However, differential dropouts can be a problem in experiments, and in rare instances the other threats may occur (e.g., occasionally random assignment to conditions-does not produce equivalent groups, so group pre-experimental measures need to be checked to rule out a selection threat).

Another important point about validity in quasi-experimental research is the value of patched-up designs. Even though a given design has inherent weaknesses (for instance, selection or history threats to internal validity), they can often be overcome by "patching it up" through additional internal data analyses, or by adding other control groups to check on the effect of particular threats to the validity of the basic design.

Threats to Construct Validity of Causes and Effects After we have determined what causal conclusions we can reach in any specific study, the next question is:

What do these particular research results mean in conceptual terms? As Cook and Campbell (1979, p. 38) expressed it, construct validity refers to "the fit between operations and conceptual definitions." In order to have construct validity, the research operations must correspond closely to the underlying concept that the investigator wants to study. The operations should embody all the important dimensions of the construct but no dimensions that are similar but irrelevant?that is, they should neither underrepresent nor overrepresent the construct.

Threats to construct validity come from confounding?"the possibility that the operational definition of a cause or effect can be construed in terms of more than one construct" (Cook et al., 1990, p. 503). For instance, is it the medicine or the placebo effect (belief in the medicine and the doctor) that causes the sufferer to improve? Another way of putting it is that construct validity refers to the proper labeling of the "active ingredient" in the causal treatment and the true nature of the change in the observed effect.

Following the proposals of Campbell and Fiske (1959), Cook and Campbell (1979, p. 61) suggested two ways of assessing construct validity: first, testing for a convergence across different measures or manipulations of the same "thing" and, second, testing for a divergence between measures and manipulations of related but conceptually distinct "things." For example, if we were interested in measuring alphabet ability among children, we could measure the number of seconds it takes each child to recite the alphabet, their ability to recognize English letters, or their scores on a word-recognition test made up of

3-letter words. If these three measures were highly related, they would have convergent construct validity.

Our measures should also show discriminant validity?they should not correlate highly with theoretically different constructs. For instance, measures of alphabet ability should not correlate with measures of personality variables like self-esteem or extroversion, which are conceptually different.

Cook et al. (1990) also noted that applied research tends to be much more concerned about the construct validity of effects than of causes, because it often seems more important to have an impact on a particular social problem than to know exactly what dimensions of an ameliorative program caused the impact. For example, applied social scientists working on the topic of .

poverty would be very concerned that their measures adequately represented such concepts as "unemployment" or "family income" (the effect), but they would probably be less involved in measuring the impact of every separate component of an antipoverty program (the cause).

The topic of construct validity is often hard to grasp in the abstract, but it should be clarified through the following examples of different threats to construct validity:

1. Inadequate Preoperational Explication of Constructs. Before measures and manipulations can be designed, the various dimensions of all constructs involved in the study should be stated explicitly. For instance, if the construct of "aggression" is defined as doing physical harm to another person intentionally, the instruments developed to measure it should not consider verbal expressions of threat or abuse, nor accidental physical injury.

2. Mono-Operation Bias. If possible, it is always preferable to use two or more ways of operationalizing the causal construct, and two or more ways of measuring the effect construct. Thus, if helping behavior were the conceptual variable, the investigator might devise several different situations in which helping could be demonstrated and use each one with a different group of subjects. This procedure should both represent the definition of helping more adequately, and also diminish the effect of irrelevant aspects of the various situations, since each situation would probably contain different irrelevancies.

3. Mono-Method Bias. Even where multiple operations of a construct are used, they may still be measured in the same basic way, leading to a mono-method threat to validity. A common example is using several different scales to measure attitudes, but allowing them all to be paperand-pencil, self-report measures. A better approach would include some behavioral measures and some observational measures of attitudes, as well as paper-and-pencil self-reports.

4. Interaction of Procedure and Treatment.

Sometimes the context in which the independent variable occurs may influence the way in which participants react to the treatment?an example of confounding of two possible causal factors. For instance, the Income Maintenance Experiments conducted in New fersey guaranteed participants a minimum income for :tree years. Thus, the effect of this treatment may have Seen produced by the payment, or alternatively by the cnowledge that it would last for three years (Cook et 11., 1990).

5. Diffusion or Imitation of Treatments. This nay be a threat when people in various treatment and ;ontrol groups are in close proximity and can commu&ate with each other. In consequence, the intended inlependent variable condition (such as special informaion) may be spread to the other groups and diminish r eliminate planned group differences. For example, the pupils who saw "Sesame Street" might tell the non-viewing control group information they had learned from the program.

6. Compensatory Equalization of Treatments.

This can occur when a planned treatment is valued by community members, as in the case of a Head Start program or an improved medical treatment. There are numerous instances in the literature where comparisons of such a treatment with an intended control group were subverted by authorities or administrators, who gave the "control" group a similar or offsetting benefit in order to avoid the appearance of unfair treatment of different individuals or groups. Unfortunately, such attempts at "fairness" can ruin crucial research procedures.

7. Compensatory Rivalry from Other Participants. This is similar to the equalization-of-treatment threat except that it is a response of participants in less-desirable treatment groups, rather than of administrators. When the assignment of people to treatment conditions is known publicly, those in the control group or the less-desirable treatments may work extra hard in order to show up well, and as a result the treatment comparisons may be invalidated. Saretsky (1972) pointed out examples of this in experimental educational programs where the control-group teachers saw their job security as being threatened. He called it the "John Henry effect," after the legendary railroad steel-drivin' man who won his contest with the newly invented steam drill, though he killed himself in the process.

8. Resentful Demoralization of Other Participants. This is the opposite of compensatory rivalry.

If study participants in less-desirable conditions become resentful over their deprivation, they may intentionally or unintentionally reduce the quality or quantity of their work. This reduced output would not reflect their usual performance, of course, and so would not be a valid comparison for the other treatment, conditions.

9. Hypothesis-Guessing Within Experimental Conditions. This is an aspect of reactivity in research, in which the act of studying something may change the phenomenon being studied. Wherever possible, it is best to disguise procedures so that research participants cannot guess the research hypothesis and change their behavior accordingly. However, as pointed out in Chapter 4, there is relatively little empirical evidence of research subjects being consistently negativistic or consistently overcooperative, even when the research hypotheses are easily guessed.

10. Evaluation Apprehension. As described in Chapter 4, this is a subject effect that does seem to occur fairly frequently in laboratory studies. Though it is probably less common in field studies, it can still be a threat to validity because its effects can be confounded with the results of any other causal variables. Hence, efforts should be made to minimize it.

11. Experimenter Expectancies. This aspect of experimenter effects was also discussed in Chapter 4.

Because experimenter expectancy effects can be confounded with effects of the intended causal variable, they should be avoided wherever possible, using the techniques described earlier.

12. Confounding Constructs with Levels of Constructs. Often research uses only one or two levels of a variable, and because of practical as well as ethical limitations, the levels used are apt to be weak ones. If the research results are nonsignificant, a conclusion of "no effect" of variable A on variable B may be drawn. In contrast, if a higher level of variable A had been used, a true effect might have been found. To avoid this threat, it is desirable to do parametric research, in which many levels of the independent variable are used. Otherwise, it is important to qualify research conclusions by specifically stating the levels of the variables involved.

One important way of assessing construct validity is to use manipulation checks, as discussed in Chapter 4. This procedure verifies whether the treatment actually created differences in the intended constructs and left unchanged other features that it was not intended to affect. After research data have been collected, another important approach to construct validity is to generate a multitrait-multimethod (MTMM) matrix. As discussed above, a study should use more than one measure of each main construct, and preferably these measures should employ different methods (e.g., self-report, observation, physiological measures, etc.). The MTMM matrix is simply a table of the correlation coefficients among the measures. Convergent validity is reflected in high correlations between various measures of the same construct, while discriminant validity is reflected in low correlations between measures of different constructs. More quantitative approaches to assessing construct validity are available through the use of a technique called confirmatory factor analysis (Gorsuch, 1983), which is a special type of the structural equation modeling procedure we mentioned in Chapter 5.

Threats to External Validity

The final question in assessing research findings is: To what extent can the findings be generalized to other persons, settings, and times? This question defines the external validity, or generalizability, of the research.

When samples of participants are chosen because they are conveniently available (such as introductory psychology students), it is difficult to know what underlying population they represent, and even more difficult to know whether the findings apply to particular other groups. If a treatment has a given effect on one group of individuals but not on another group, this is a statistical interaction. Hence, the following threats to external validity are stated as interaction effects between the experimental treatment and some other condition.

1. Interaction of Different Treatments. When-ever subjects are exposed to more than one treatment, the combined or interaction effect of the treatments may be different from their separate effects. In such cases it is important to analyze the effect of each treatment separately as well as in combination. For instance, does an instructional program produce better

learning only for students that have been given a motivational incent?ve, and not for others?

2. Interaction of Testing and Treatment. The measurement conditions of a study, such as repeated testing, may interact with the intended treatment to produce a combined effect. For example, a pretest measure may sensitize subjects to a later treatment (signaling them that there is something they should learn about a given topic), or it may inoculate subjects against the effect of a later treatment. Though careful research (e.g., Lana, 1969) has indicated that neither of these possible interactions is common in experimental studies, it is wise to take precautions against them. This would involve using posttest-only control groups, which, because they had no pretest, could not display a sensitization or an inoculation effect.

3. Interaction of Selection and Treatment. A treatment may work with one type of person (such as introverts) but not with another type (such as extroverts), or it may work differently with the two types.

This threat can be combatted by making the group of participants as heterogeneous as possible, and one way to accomplish this is to make the research task so convenient that relatively few people will refuse or fail to participate.

4. Interaction of Setting and Treatment. Research findings in one setting (such as a school system) may not be applicable in another setting (such as a military combat team). "The solution here is to vary settings and to analyze for a causal relationship within each" (Cook & Campbell, 1979, p. 74). Fiedler's research on leadership (discussed in Chapter 5) provides many examples of this issue, and the above solution is exactly the one he adopted.

5. Interaction of History and Treatment. A given treatment may have different effects at one time (for instance, during a war scare, a gasoline shortage, or a re

cession) than at other times. To give greater confidence in the continuing applicability of findings, studies can be replicated at different times, or literature reviews can be conducted to search for previous supportive or contradictory evidence.

In addition to replicating studies, we can increase external validity by choosing research samples that are either representative of an important ponulation or else as heterogeneous as possible. Since representative random sampling is often impossible in applied settings, a very useful model is to select settings for study that

differ widely along important dimensions. For instance, in research on an innovative educational method, the treatment should be tried out in one or more of the "best" schools available, and in some of the "worst" problem schools, and in some "typical"

schools. Racial composition and other important di mensions might be varied by similar means. Though this procedure does not guarantee generalizability to other schools in other geographic areas, it certainly increases its likelihood.

Relationships Among the Four Types of Validity

To summarize our discussion of the threats to various kinds of validity, we may note that statistical conclusion validity is a special case of internal validity. That is, they both deal with whether conclusions can properly be drawn from the particular research data at hand. Internal validity is concerned with sources of systematic bias, which can influence mean scores, whereas statistical conclusion validity is concerned

with sources of random error, which can increase variability, and with the proper use of statistical tests (Cook et al. 1990; Cook & Shadish, 1994).

Similarly, construct validity and external validity are very closely related because they both deal with making generalizations to other conditions. In other words, they both bear on the conditions under which a causal relationship holds. The major difference between them is that external validity is concerned with generalization to concrete, specific examples of other persons, settings, and times, whereas construct validity is concerned with generalization to other examples of concepts like aggression, cooperation, or authoritarianism.

Some methods of increasing one type of_validity are apt to decrease other types. For example, performing a randomized experiment will maximize internal validity, but the organizations (such as school systems or businesses) that will allow such procedures are likely to be atypical, which reduces external validity. Similarly, using heterogeneous subjects and settings will increase external validity but reduce statistical conclusion validity.

Cook et al. (1990) stressed that good internal validity is the single most important requirement for research studies, but that sometimes trade-offs to achieve it are simply too costly in the loss of other values. Since no single piece of research will ever avoid all validity problems, the best procedure is a program of replications using differing research methods and settings.

Cook et al. (1990, p. 516) suggested that, for many applied social research topics, the importance of various types of validity runs from internal validity (highest) through external validity, construct validity of the effect, and statistical conclusion validity, down to construct validity of the cause.

Cook et al. (1990, p. 516) suggested that, for many applied social research topics, the importance of various types of validity runs from internal validity (highest) through external validity, construct validity of the effect, and statistical conclusion validity, down to construct validity of the cause.

+ ? ? ? + ? ? + ? ? ? + + ? ? ? + ? ? ? ? ? ? ?

Photograph courtesy of Donald Campbell and Lehigh University Office of

Public Information. Reprinted by permission.

Box 6-1

DONALD CAMPBELL, GURU OF RESEARCH METHODOLOGY

In addition to his influential writings on psychological research methodology, which are highlighted in this chapter, Donald Campbell was honored for his work as a philosopher of science, as a field researcher as well as a laboratory experimenter, and for his contributions to sociology, anthropology, and political science. Among his outstanding honors in psychology were election as president of the American Psycho-logical Association, receipt of the Distinguished Scientific Award of the APA, and election to the National Academy of Sciences and the American Academy of Arts and Sciences.

Born in Michigan in 1916, Campbell described in an autobiographical chapter how he worked on a turkey ranch after high school, attended a junior college, and did not have an article published until he was 33. After earning his B.A. at the University of California, Berkeley, he served in the Navy and the OSS during World War II. Completing his Ph.D. at Berkeley in 1947, he taught for several years at Ohio State University and the University of Chicago. He settled at Northwestern University in 1953 and remained there until 1979, moving then to Syracuse and later to Lehigh University, where he died in 1996.

Despite his late start at publishing, Campbell coauthored over 10 books and 200 articles and book chapters. He was best known within psychology for his seminal works on quasi-experimental research methods, on unobtrusive research, on attitude measurement, and for his advocacy of planned experimentation on social and governmental programs.

? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? ? + ?

ANALYSIS OF THE "SESAME STREET"

STUDY

Now let us return to Minton's (1975) study on the effects of "Sesame Street" and analyze it in terms of the preceding list of threats to valid inference. To begin, we might diagram the study schematically, following Cook et al.'s (1990) notation. In the following diagram, the experimental and control groups are shown on separate lines, with the broken line between them indicating that they are nonequivalent (since the children were not randomly assigned). The time sequence of events is shown by their position on the line, with earlier events farther left. The letter 0 stands for an observation (in this case the MRT administration) and the letter X for an experimental treatment (exposure to "Sesame Street"), while the numerical subscripts merely indicate the sequence of these events. Thus, the study can be diagrammed:

Control group:

Experimental group: X 0 2

This is an example of an institutional-cycle or co-hort design, the basic feature of which, as mentioned earlier, is that the various cohorts (in this case annual entering classes) go through essentially the same institutional cycle (here, the kindergarten) at different times. Cohort designs are frequently useful, because it is often reasonable to assume that successive cohorts are highly similar to each other on most characteristics.

Also, many institutions such as school districts or businesses keep careful archival records, which can some-times be used for measures of the variables of interest and can also provide checks on the assumed comparability of the successive cohorts.

Statistical Conclusion Validity

Let's examine each of the major types of validity in turn. First, statistical conclusion validity seems good in Minton's study. In general, positive findings raise fewer questions about statistical conclusion validity than do negative results, and Minton's findings were strongly positive (on the Alphabet subtest). Therefore we can dismiss questions about low statistical power and poor reliability of measures (for that subtest at least), even though no reliability figures were given. In one instance Minton did report a check on assumptions of the analysis of variance, and it seems that violated statistical assumptions were not a problem there. Despite the number of significance tests made, the regularity of the findings indicates that the study was not just a "fishing expedition."

The only threat to statistical conclusion validity that seems to be present is varying degrees of treatment implementation?that is, half the children watched "Sesame Street" every day, most others watched it sometimes, and a very few never saw it. In a quasi-experimental study like this where the treatment condition could not be assigned, such differences in implementation are inevitable, and fortunately Minton did present clear data on the extent of exposure to the program for all the subgroups of children. However, it would have been desirable for her to go one step further and present an analysis of the MRT scores for heavy viewers, lighter viewers, and nonviewers, since that would have been the clearest possible operationalization of watching "Sesame Street."

Internal Validity

The crucial category of internal validity also seems quite good in Minton's study, though there are some lingering questions. Taking the good points first, there are no problems with maturation (all groups were the same age when tested), testing (no pretest was given), statistical regression (the whole kindergarten cohort was used for each year), or mortality (all children present in May were tested). Also, there is no ambiguity about the causal direction (from watching "Sesame Street" to MRT scores, rather than vice versa). Since all children in several similar cohorts were studied, there was little danger of interactions with selection (though this could have been a problem, for instance, if only the advantaged children's scores were compared with those of the disadvantaged group). Because the MRT is a standardized group test, threats due to instrumentation changes were unlikely (though they could conceivably occur if the testers in the earlier years were markedly inexperienced and so used different testing procedures from those used in 1970). Only two factors pose plausible threats to internal validity: selection and history. Selection differences between the cohorts are still possible despite their general similarity. One obvious example is that the earlier years' cohorts would have a larger proportion of first-born children than would the 1970 cohort, and if first-born children performed less well on the MRT, that could be mistaken for a beneficial effect of watching "Sesame Street." It is probably more likely that first-borns would perform better, but in either case there is a possible confounding. However, Minton handled that problem very nicely by comparing the 1970 kinder-gartners and their older siblings with the 1969 class and their older siblings. Since the latter comparison showed no significant superiority in MRT scores for the younger siblings, the hypothesis that older siblings generally performed worse on the MRT could be convincingly dismissed.

History is the most common threat to internal validity in a design (like this one) that doesn't have a simultaneous control group. That is, anything else that happened to these children between May 1969 and May 1970 could be mistaken for the effect of watching "Sesame Street." Suppose, for instance, that the school district had instituted a new reading-readiness program in the kindergartens in the fall of 1969. Its effects might look just like those found in Minton's study.

One way of checking on the effects of gradual historical change is to have more than one earlier cohort, as Minton did. However, this procedure will not detect any historical occurrence that has a sudden onset at about the same time as the experimental treatment. A good control for the effects of history, which Minton could have used but didn't, would be to partition the experimental treatment into two or more levels (such as heavy and light viewers) and then check their comparative amount of learning. If higher levels of the purported experimental treatment produced significantly more learning than lower levels, that finding could not plausibly be explained as the effect of some other historical effect, such as a new school program. However, Minton used another procedure, which gave a some-what similar safeguard against a threat from history. Instead of partitioning the independent variable, she divided the dependent variable into seven different MRT scores. Since only the score most closely related to the content of "Sesame Street" (the Alphabet subtest) showed significant findings, there is strong reason to believe that the causal factor was watching "Sesame Street." By contrast, if there had been a new school program aimed at improving school readiness, it would probably have influenced some of the other subtest scores as well. Hence, a threat to the validity of Minton's study from unknown historical events, though not impossible, seems very unlikely.

The three preceding paragraphs give excellent examples of how a design can be "patched up" in order to overcome any inherent weaknesses it may have.

Construct Validity

The area of construct validity presents the most questions in Minton's study. In part, this is an inevitable result of the specificity of the research question about the effects of one particular TV program, "Sesame Street," on one particular test battery. Thus, the problems of mono-operation bias and mono-method bias were built into the study. As a result, we know the effects of that TV program on one test but not on other tests or measures of kindergarten performance, and we also don't know how other educational TV programs for children would affect the MRT or other measures. At a minimum, it would have been preferable to investigate convergent validity by including other tests of alphabet skills in the study. The ideals of construct validity would have been better met if both the independent variable and the dependent variable were considered as representatives of a class of educational programs and tests respectively, and if other representatives of each of these classes were also included in the study. Of course, this is a tall order, but only such large-scale multivariate studies can produce fuller knowledge of the general constructs. To put it another way, from this study we know that watching "Sesame Street" had an effect on children's MRT Alphabet scores, but we don't know why, nor what other effects it may have had.

One other construct validity problem is that the initial explication of constructs would have been better if the author had specified that Alphabet should be the only MRT score affected before doing the study rather than after the data were analyzed. Other threats in this category seem well controlled?for instance, there is no likelihood of hypothesis-guessing, evaluation apprehension, or experimenter expectancies affecting the results. There could be no diffusion of the treatment (the program wasn't on the air in previous years); and similarly, there was no chance for compensatory equalization of treatments, compensatory rivalry, or resentful demoralization on the part of the earlier control groups. Likewise, the other possible threats to construct validity seem minimal. Minton could have investigated the issue of confounding constructs with levels of constructs by analyzing the regular viewers of "Sesame Street" separately from those children who watched it less regularly, but this threat does not appear to seriously affect the construct validity of the study.

External Validity

The external validity of Minton's study seems good because it was done in a natural field setting with nonreactive procedures and measures. Because there was no pretest before the kindergarten year, there could be no interaction of testing and the treatment. Questions could be raised about each of the other threats in this category, but none of them seems very serious. For instance, there was only one treatment in the study (watching "Sesame Street") so there was no opportunity for it to interact with another treatment (e.g., a new kindergarten teaching method). There could be an interaction of selection and the treatment, but Minton checked on this with a variety of subgroups, and only the "disadvantaged" group was found not to have benefited significantly from watching "Sesame Street." An interaction of setting and the treatment would be present if Minton's results didn't hold for other school districts, or for children at other grade levels. However, there seems no reason to believe that children in other towns would respond differently to the TV program, and the kindergarten level is certainly an appropriate one on which to measure the show's effect. Finally, an interaction of history and the treatment would be illustrated if "Sesame Street" in its second year on the air or at some other time no longer had the same effects that Minton found. At the moment we can only speculate about such long-range questions, but they do not seem obvious threats to the validity of Minton's findings.

A TIME-SERIES STUDY:

TV AND READING

We turn now, briefly, to the other major type of quasi- experimental research. The two essential characteristics of interrupted time-series designs are that several similar observations are made on a group of people or objects over a period of time (often a large number of weekly, monthly, or annual observations), and that sometime during that period an event occurs that is expected to change the level of the dependent variable observations. The event?the independent variable in the design?might be a new government program, a natural disaster such as a flood, an international event such as a war, or the introduction of new technology such as household videocassette recorders. If the specified experimental event in such a study is accompanied by a change in the dependent variable, thus distinguishing that time point from the pattern of the several preceding observations, the long series of observations can provide good protection against some of the major threats to valid inference (such as maturation, testing, instrumentation, and regression).

Interrupted time-series studies have been conducted on a variety of applied topics (Mark, Sanna, & Shotland, 1992). A famous example of an interrupted time series study demonstrated the significant effect of the British "breathalyser" crackdown, a nationwide program in which British police began giving on-the-spot breath tests to drivers who were suspected of being drunk or committing traffic offenses (Ross, Campbell, & Glass, 1970). Other examples include studies on the effects of: Mandatory seat belt laws (Wegenaar, Maybee, & Sullivan, 1988) Publishing shoplifting and drunk driving offenders' names in a newspaper (Ross & White,1987) Gun control ordinances (O'Carroll et al., 1991) Media publicity about an execution (Stack, 1993) The advent of television on crime rates (Hennigan et al., 1982)

The study we will discuss here was done by Edwin Parker and several colleagues and reported by Cook et al. (1990, pp. 566-568). It concerned the effects on public library book circulation of the advent of television in various Illinois communities in the 1940s and 1950s, and it followed the methodology pioneered in an earlier study by Parker (1963). The independent variable was the introduction of television reception in the various communities, and it was expected to cause a decrease in the dependent variable, a careful compu tation of per capita book circulation from each town's public library over a long period of years. Actually television broadcasting arrived at quite different times in different communities because the Federal Communications Commission put a freeze on the issuance of licenses for new TV stations in 1951 and did not lift it until 1953. Thus, some Illinois towns had television reception for several years before other towns obtained it.

This differential time of TV introduction allowed a replicated or multiple time-series design, in which the early-TV communities could be compared with the late-TV communities over a period of many years. If the pattern of changes in book circulation coinciding with the advent of TV reception was similar in the two groups of towns, that would give added support to the inference that the introduction of television caused the changes in circulation. Parker chose 55 pairs of early-TV and late-TV communities, which were matched on relevant variables such as population and library circulation. Thus, this design can be diagrammed as fol lows, indicating each group of communities by a line of observations, separated by a broken line to show that they were not completely equivalent (again 0 indicates an observation of the dependent variable, book circulation, and X indicates the experimental event, the introduction of TV reception).

Early-TV: 0, 02 03 X 04 05 06 07 08 09

Late-TV: 0, 0 0 0 0 0 X 0, 0 0

2 3 4 9 9 8 9

Results of the study are shown in Figure 6-1, which displays per capita annual library circulation for the years from 1945 to 1960. Looking at the upper curve, for the lateTV communities, you can see a drop in circulation for the years 1953 and 1954, which was exactly when TV was becoming available to them.

Though a statistical test demonstrated the significance of that decrease, it would not be as conclusive in itself as it is in conjunction with the curve for the early-TV communities. There it can be seen that a very similar and even steeper drop in library circulation occurred in 1948 and 1949, the period when television was first becoming available in those communities; again, a statistical test showed that the drop was significant. The parallelism of these two curves, with the drops occurring as hypothesized at the times when TV was being introduced, is very strong evidence that the advent of TV really was the causal variable. Another notable feature is the virtual superimposition of the curves in the final years of the study (1957-1960), and their similar pattern before 1948, both of which support the conclusion that the two sets of matched towns really were comparable.

FIGURE 6-1 Results of the TV and reading study: per capita library circulation

in two sets of Illinois communities as a function of the introduction of television.

(Source: Thomas D. Cook and Donald T. Campbell, Quasi-Experimentation. Copy-

right ᄅ 1979 by Houghton Mifflin Company. Used with permission.)

Analysis of the TV and Reading Study

The power of the design derives from its control for most threats to internal validity and from its potential in extending external and construct validity. External validity is enhanced because an effect can be demonstrated with two populations in at least two settings at different moments in history. Moreover, there are likely to be different irrelevancies associated with application of each treatment and, if measures are unobtrusive, there need be no fear of the treatment's interacting with testing. (Cook et al., 1990, p. 566)

Despite these strengths, the study had some potential validity problems. In the area of internal validity, history could conceivably be a threat. A specific example of an historical event whose effects could mimic the predicted effects of TV in this study is the increased availability of paperback books. In fact, publication of paperbacks did increase markedly during this time period, and their availability at a low price might possibly account for the observed drop in library circulation.

However, in order for this explanation to fit Parker's replicated time-series study, there would have to be a reason to expect paperbacks to affect the two groups of communities at different points in time?an interaction of selection and history. Though not too likely, that is at least possible, for the communities did differ in some relevant ways despite their matching. The early-TV communities in general were urban and relatively affluent, while the late-TV towns were mostly rural and poorer. Thus, it is conceivable that the impact of paperback books could have hit the poorer communities five years later than the richer ones, and been the real cause (or a partial cause) of the decline in library circulation. One way of checking on this possibility further, as Parker (1963) did in his earlier study, would be to divide the variable of circulation into fiction and nonfiction books. In 14 pairs of Illinois communities, he found that the advent of television decreased the library circulation of fiction books significantly more than that of nonfiction ones. He had predicted this effect on the basis that TV, as a primarily fictional or fantasy medium, would be more likely to displace fictional reading; and this finding makes the explanation of lower circulation as being due to the increased availability of paperbacks even less plausible than before.

In general, the least impressive aspect of this study was its construct validity. As witn Minton's study of "Sesame Street," this was largely the result of its mono-operation and mono-method approach. Library circulation is only one index of the amount of reading in a community (though perhaps the most readily available one), and a fuller understanding of the construct would have been gained by using a variety of measures of the dependent variable.

One other question about this study concerns the reliability of treatment implementation. The introduction of television into a community may occur at one precise moment when the local station begins broadcasting, but that doesn't mean that the whole community (or even an appreciable fraction of the population) will be affected by it at that time. In fact, it teok from four to six years for the new technology to diffuse to the point that three-quarters of the homes in the Illinois communities had television sets (Cook et al., 1990, p. 567). Yet the dates of the library circulation decreases in Parker's study (1948 and 1953) were years when only 10% to 15% of the homes in the respective groups of communities had TV sets! Thus, it is unclear what process led to the decrease in library circulation Was it accounted for by just 10% to 15% of the population? Or could it have been an anticipatory effect on the part of the remainder: getting ready for TV by reading less? These questions go beyond the observed outcome to ask about the process by which television's arrival affected reading and other community activities.

USING RANDOMIZATION

IN FIELD RESEARCH

The last topic in our discussion of quasi-experiments is: When should one use randomized assignment of subjects to treatment conditions (that is, true experi-ments)? A crucial basic principle here is that randomization should be used whenever possible because it increases the strength of causal inferences (Cook & Shadish, 1994). As we discussed in Chapter 4, the great advantage of true experiments is that randomization equates the initial characteristics of the treatment sub-groups, within specifiable limits of sampling error, and thus allows proper use of the most powerful statistical tests. However, remember that initial equivalence of experimental subgroups does not guarantee their final equivalence at the end of the experiment, because such factors as differential attrition, compensatory equalization of treatments, compensatory rivalry, or demoralization may produce marked discrepancies in final sub-group scores, entirely apart from any effect of the treatment variable. For that reason, checks on the presence of these factors should always be included in experimental research designs.

Randomized experiments have sometimes been criticized as too costly, in both money and time, to be widely used in social research. It is true that correlational research or quasi-experimental studies are apt to be quicker and cheaper than true experiments. How-ever, many authors have insisted that the costs of research are far outweighed by the social costs of being wrong about research conclusions that affect national programs or even local affairs (Cook et al., 1990; Coyle, Boruch, & Turner, 1991; Manski & Garfinkel, 1992). Consider, for instance, the costs to the public of wrong decisions about the approval for sale of a hazardous drug like thalidomide, or about a prison early-release program involving still-dangerous convicts.

Despite the difficulties of conducting randomized experiments in field settings, there is a surprisingly long list of published experiments on social programs in such as education, law, mental health, and employment (Boruch, 1996; Cook & Shadish, 1994). Cook and Campbell (1979) presented a helpful list of types of situations where randomization may be possi ble and acceptable in social research. Among their examples, two fairly common ones are: Lotteries for the equitable distribution of scarce resources (such as new condominiums) or undesirable burdens (such as military service). Assignment of applicants for a social benefit to a treatment group or a waitinglist control group in circumstances where the demand exceeds either the supply (such as new medical techniques like kidney dialysis) or the distribution capacity (such as job-training programs).

Randomization in Quasi-Experimental Studies

There is one other way in which randomization can be useful, even in quasi-experimental studies where the researcher does not have full control over assignment of the treatment conditions. Recall that the definition of quasi-experimental research indicated that the investigator does have control over the timing and methods of measurement. Thus the investigator can randomly assign measurement to part of the total sample at one time and part at another time. One example is the separate-sample pretest-posttest design, which can be diagrammed as follows (R standing for random assignment):

Pretest-posttest group: R 01 X 0,

Posttest-only group: R X 03

Why would anyone want to use such a design? A major circumstance where it would be useful is when the whole population must undergo the treatment (for instance, a year of high school study), but the pretest measurement is reactive so that it affects the later learning of those exposed to it (for instance, a test of American history knowledge). In such a situation, giving the pretest to a random half of the students and only the posttest to the other random half will ensure that a non-reactive pre-post comparison can be made (by comparing the first group's 01 , with the second group's 03 ), which would not be possible in any other common design. Though this design suffers from other threats to validity, they can be largely overcome by the principle of "patching-up" with additional control groups and measurements (Campbell & Stanley, 1966).

SUMMARY

In quasi-experimental research, the investigator does not have full control over manipulation of the independent variable, but does have control over how, when, and for whom the dependent variable is measured. The many quasi-experimental designs are useful when better designs are not possible, and they can often be "patched-up" to avoid most of the threats to valid inference. No single study is ever free from all inferential problems, but in general, quasi-experimental designs are weaker than experiments and stronger than correlational research.

The four major types of validity are: (1) statistical conclusion validity (was there a significant effect?), (2) internal validity (was it a causal effect?), (3) construct validity (what does the finding mean in conceptual terms, or in understanding of the "key ingredient" of a complex measure?), and (4) external validity (ran the finding be generalized to other persons, settings, and times?). The first two deal with whether conclusions can properly be drawn from the particular data at hand, while the last two concern generalization to other conditions. Internal validity is usually the most essential characteristic, for without it other types of validity are meaningless. There are many specific problems in research design that constitute threats to each of these types of validity, and there are many corresponding ways of combatting the problems in order to reach valid conclusions.

Minton's study about the effects of the first year ofthe "Sesame Street" program on kindergarten children's school-readiness test scores illustrates possiblethreats to validity in one major class of quasi-experimental studies: nonequivalent control group designs.

Similarly, Parker's study of how the introduction of television affected public library circulation exempli fies the other main class of quasi-experimental studies: interrupted time-series designs.

Randomized assignment of subjects to treatmentconditions is the great strength of true experimental designs and should be used wherever possible in order to strengthen causal inferences. There are many social situations in which randomized experiments are possible, given careful forethought and planning. Even in quasi experimental designs, randomization can be used very profitably to divide subject groups for different measurement procedures.

SUGGESTED READINGS

Campbell, D. T., & Fiske, D. W.

(1959). Convergent and discriminant validation by the multitrait multimethod matrix. Psychological Bulletin, 56,

81-105.?This early paper is still a useful description of how to determine

construct validity through a pattern of similar and dissimilar

relationships.

Cook, T. D., Campbell, D. T., &

Perrachio, L. (1990). Quasi experimentation. In M. Dunnette & L. Hough

(Eds.), Handbook of industrial and organizational psychology (2nd ed., Vol. 1, pp. 491-576). Palo Alto,

CA: Consulting Psychologists Press.?The most recent comprehensive overview of

the four kinds of validity, the many types of quasi-experimental designs, and the

typical threats to validity of each design.

Mark, M. M., Sanna, L. J., & Shotland,

R. L. (1992). Time series methods in applied social research. In F. B. Bryant,

J. Edwards, S. Tindale, E. Posavac, L. Heath, E. Hender son, & Y.

Suarez-Balcazar (Eds.), Methodological issues in applied social psychology:

Social psychological applications to social issues (pp. 111-133). New York:

Plenum.

Ross, A., & Grant, M. (1994).

Experimental and nonexperi mental designs in social psychology. Madison,

WI: Brown & Benchmark.?This short book gives an intro duction to

experimental design and an overview of the strengths and threats to validity of

many research designs.

Komentar

Posting Komentar